My biggest highlight of 2021 is that halfway through the year (in July) I left my software engineer position at MIT CSAIL for another software engineer position at Boston Dynamics. In the first part of this recap, I went through some of the research projects I contributed to before wrapping up at MIT. Here, I switch focus to my experience so far with Boston Dynamics.

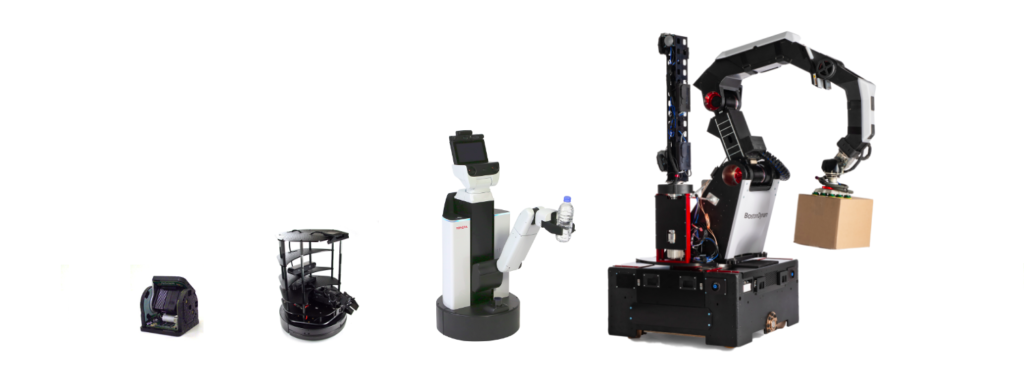

To start, I want to show a graphic I used for my interview at Boston Dynamics, which summarizes my career in robotics quite nicely.

What this shows is an evolution of absolute size (but also complexity) in the robot platforms I have worked with.

- First are the CKBot modular robots from the University of Pennsylvania, which I used for my Master’s thesis. This was my first experience with “real” hardware, although these were simple robots from a software standpoint, in that each robot consisted of a single servo motor and localization was done externally through Vicon markers.

- Second is the TurtleBot2, which represented my time at MathWorks acting as a robotics educator. The TurtleBot series is one of the quintessential platforms for education with the Robot Operating System (ROS), as it perfectly balances simplicity with capabilities. We used this platform within MathWorks in the context of “Getting started with ROS” videos, and in collaborations with RoboCup, namely the awesome RoboCup@Home Education initiative.

- Third is the Toyota Human Support Robot (HSR), which I first learned about while working with RoboCup (it was one of the RoboCup@Home standard platforms). I was so fascinated with how much effort went into a home service robot that I ended up moving to a full-time position working with it at MIT CSAIL. You can learn more about my experiences with the HSR in last year’s recap post.

- The fourth robot is Stretch, which is Boston Dynamics’ new warehouse robot. As you may have put together, my current role is with Stretch and I will spend some time in this post talking about Stretch and what I do on that team.

I am a software engineer, so please take all I say with full knowledge of this bias. If you are more hardware-minded, some of these lessons may still apply, though! Throughout this post, I want to leave you with:

- How my nonlinear career trajectory eventually got me here after 10 years.

- What is similar and different between software engineering roles in academia vs. industry.

- What I think defines a software development environment for a successful commercial robot.

Introduction to Stretch

2021 was an interesting year for Boston Dynamics in that it announced an acquisition by Hyundai Motor Group and unveiled its Stretch warehouse robot. This relatively new addition of logistics applications to the more mature Spot and Atlas robots has meant a recent boom in hiring, and I am thrilled to have been part of that in joining the Stretch team in July 2021.

The official Stretch website mentions that Stretch can “unload trucks” and “build pallets”. The key thing to note is that Stretch is designed to manipulate boxes in warehouse environments, with the goal of automating tedious work and elevating human workers to the role of supervising robots as tools. If you want more details about truck unloading with Stretch, Evan Ackerman recently wrote an excellent IEEE Spectrum article.

Because Stretch is a relatively compact mobile robot (by warehouse standards) with roughly the footprint of a pallet, it is an important design point that an operator can drive Stretch to its destination (whether it’s a truck in a loading dock or pallet racking), hit “go”, and oversee several robots executing autonomously, stopping only when flagged for interventions. In other words, Stretch is a robotic system that fits into an existing warehouse, rather than one requiring significant warehouse redesign. There are, of course, merits to both approaches, but Stretch is designed with the former in mind.

At its core, Stretch is a mobile manipulator consisting of an omnidirectional mobile base and a 7 degree-of-freedom arm. The end effector is a large suction gripper optimized to manipulate boxes. Additionally, there is a “perception mast” sticking out of the arm’s base. This consists of two RGB-D cameras and a yaw actuator to aim the cameras relative to the arm for various vision tasks. There are also lidars on the base.

The Stretch Behavior Role

So… designing this highly capable mobile manipulator platform from scratch and making sure it’s a viable product is no easy task. Unsurprisingly, there are lots of people at Boston Dynamics making this happen; namely, entire teams focused on hardware, software, business development, and so on.

My small part in Stretch falls under software engineering, where I am on a team focused on behavior. This is a super general term, but one that is dear to me in that it denotes the highest level of programming a robot to do things. If you want a refresher on all the terminology denoting the system components of a robot, and my preference for being at such a high level, refer to my Anatomy of a Robotic System post.

I see my team’s role within the Stretch software ecosystem as follows:

- Controls team = proprioception: They get a robot from the hardware folks and make sure that all its controllable things (wheels, arm, perception mast, gripper, etc.) work correctly and safely.

- Perception team = exteroception: They have access to the cameras, lidars, etc. and need to figure out how to make sense of the world around Stretch. For example: Where are the boxes that need to be picked up? Where are the truck walls?

- Behavior team = reasoning: Having both self- and external awareness, which in software manifests itself as A LOT of data structures, we need to use this information to make the robot execute its task autonomously. For example: Given knowledge of a specific box we have to pick off a truck and place on a conveyor, what is the best grasping strategy so we don’t drop the box or hit a wall along the way?

Planning for autonomous behaviors from a specific world state is only one part of our team’s role. I like to think of this as the “nominal” behavior of the robot. In a perfect world with perfect sensing and perfect algorithms, our robot should in theory be able to deliberate about some action (like moving a box), and it will just work when executed. This is, of course, not how the real world works.

As a result, a lot of our job involves making the robot behavior robust. Through software abstractions for defining behavior (as an example, see my post on behavior trees) we can build recovery strategies for all kinds of failures. The key goal is that the robot can, and will, make mistakes, but it should know how to autonomously recover from those mistakes and continue running with minimal supervision. And finally, if there truly is a situation where the robot is stuck, it should communicate this to an operator who can manually intervene. This “robustifying” effort to get Stretch to run as long as possible without intervention is as challenging as it is gratifying to get right.

For example, let’s say we tried to pick up a box and we sensed that the gripper never got suction on it.

- Should we try again?

- With the same grasping strategy or a different one?

- How many times should we try again before giving up?

- What does “giving up” mean? Do we assume there is no box there, or there is an obstacle, and move on to the next box? Or should we take the extra time to rescan for boxes in hope we clear away “bad” measurements?

- … and how long should we let the robot give up and retry before notifying someone?
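To make these questions concrete, here is a minimal Python sketch of a bounded retry policy with escalation. It is a toy illustration only, not how Stretch is implemented: the names `RecoveryPolicy`, `try_pick`, and `Outcome`, as well as the default limits, are hypothetical, and each grasp strategy is assumed to be a callable that returns `True` when suction is sensed.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Callable, List, Sequence


class Outcome(Enum):
    PICKED = auto()          # a grasp attempt achieved suction
    SKIPPED = auto()         # gave up on this box and moved on
    NEEDS_OPERATOR = auto()  # too many give-ups in a row; ask for help


@dataclass
class RecoveryPolicy:
    # Hypothetical limits; real values would come from testing.
    max_attempts_per_strategy: int = 2
    max_consecutive_skips: int = 3


def try_pick(strategies: Sequence[Callable[[], bool]],
             policy: RecoveryPolicy,
             consecutive_skips: int) -> Outcome:
    """Try each grasp strategy in order, retrying each a bounded number of times."""
    for attempt_grasp in strategies:
        for _ in range(policy.max_attempts_per_strategy):
            if attempt_grasp():  # True means suction was sensed
                return Outcome.PICKED
    # Every strategy failed: decide between skipping this box and escalating.
    if consecutive_skips + 1 >= policy.max_consecutive_skips:
        return Outcome.NEEDS_OPERATOR
    return Outcome.SKIPPED


# Stand-in grasp strategies that record the order in which they were tried.
calls: List[str] = []


def top_grasp() -> bool:
    calls.append("top")
    return False  # pretend the top grasp never gets suction


def side_grasp() -> bool:
    calls.append("side")
    return True  # pretend the side grasp works on the first try


result = try_pick([top_grasp, side_grasp], RecoveryPolicy(), consecutive_skips=0)
print(result, calls)
```

Each question in the list maps to one knob: whether to retry and how often (`max_attempts_per_strategy`), whether to change strategy (the order of `strategies`), and when to notify someone (`max_consecutive_skips`). A real system would also need to decide what "skipping" means, for example whether to rescan before moving on.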

For the rest of this post, I will take a step back and discuss how my career path so far eventually got me the skills needed to work on Stretch, and how this role differs from my previous jobs which have all been in some way more academic.

The Nonlinear Path to Gaining Skills

In graduate school, my technical focus had stayed away from some now indispensable areas like computer vision and machine learning (certainly the neural networks part of it) and from virtually any use of C++ and ROS. For several years after that, I was exclusively working with MATLAB and Simulink for the purposes of controls and physical simulation. It wasn’t until I started my robotics role at MathWorks approximately 6 years out of school that I wanted to get myself back into the robotics game.

This role was a good way to get out of my comfort zone in a gradual and almost self-paced way. The central part of this job involved talking to student teams in robotics competitions (mainly RoboCup) about the software tools they were using, and this market research was crucial in homing in on what I had been missing out on for the last half decade. In parallel, because I was necessarily making MATLAB/Simulink education material, I was in my comfort zone as far as software tools went, and I had a lot of energy left to devote to learning on the side. Through a combination of the Udacity Robotics Software Engineer nanodegree (which MathWorks graciously funded) and some other deep dives, like Andrew Ng’s Coursera specialization on deep learning, I was able to get a decent Python/C++ refresher and a lot of practice with ROS. It got me comfortable enough to borrow robots from the development team and start messing around without doing a whole lot besides treating MATLAB/Simulink as a ROS node.

This combination of skills is what led me to move to MIT CSAIL to work specifically on a ROS-enabled robot and now really get my hands dirty with some of the technologies whose surfaces I had previously scratched. I got to implement my own ROS packages/nodes, train my own neural networks for language processing/object recognition, and most importantly mess around with behavior abstractions (behavior trees) and task and motion planners (PDDLStream). Again, refer to last year’s recap post for more info.

With all this experience, plus the additional MIT projects that really pushed my C++ chops beyond “yeah, I modified a few lines of code”, it was now enough to try a move to the robotics industry. Don’t get me wrong; I still had a lot of preparation to do, including some refresher coursework and the usual coding exercises. I highly recommend the Beginning C++ Programming course on Udemy to get started, then a lot of hands-on practice sprinkled with tips and tricks from the CppCon YouTube channel.

So what’s the takeaway here? That a nonlinear trajectory isn’t necessarily a bad thing and you can always get back into the game. Yes, there are people who end up at places like Boston Dynamics right out of school, and that’s great! However, I consider myself fortunate to have a nice scaffolded career progression that eventually got me here. If you have a similar goal to get into a top robotics company, know that your life is far from over if it’s not something you attain right away. Always keep learning!

Robotics Education vs. Research vs. Industry

Going back to the “evolution” graphic at the start of this post, it hasn’t just been the complexity of the robot that has changed over time. Actually, the key differences between my previous roles and the current one have little to do with the hard technical aspects, and much more to do with the 3 Ps: people, process, and product.

- People: Who is developing the robot?

- Process: How is the robot developed, tested, and maintained?

- Product: Who is the robot for? What problem is it solving?

In the context of educational demos at MathWorks, I was on my own developing examples, and the primary focus was to showcase software tools. The examples and videos could therefore be adapted to ensure two primary goals: First, that they are a gentle introduction to some technical topic, so the example is not overly complex but also not overly simplistic. And secondly, that the example fits with the strengths and capabilities of the software itself.

Moving towards the MIT role, the focus was exposition of novel research topics. The robot had to possess basic capabilities for vision, navigation, manipulation, speech, etc. but they still only had to be good enough to fit within a well-scoped demo. It didn’t matter so much that the vision system was good, for example, but rather that it was good enough to convince a practitioner that they could use their own (and presumably better designed) vision system and fit the novel research bits on top of that. So in some way, we still had the luxury of simplifying things that were less important for the research goals.

At Boston Dynamics, we work on a robot whose goal is to be placed in any warehouse and perform tasks around the manipulation of boxes like unloading a truck or building pallets. Sure, there are some assumptions we can make and some constraints we can enforce on the environments that we can put Stretch in. However, compared to previous roles I am now thinking much more about robustness and generality. As described earlier, this translates to A LOT of testing and A LOT more people to get the job done. The development process is therefore much more front-loaded with regular and thorough testing, although we certainly still retain aspects of sprinting towards a deadline.

So how are things different when suddenly you’ve gone from doing your own educational videos, to collaborating with a handful of people on a paper, to being in a team of dozens of people delivering a product-quality warehouse robot? Suddenly, what you think is an innocuous code change or quick fix ends up causing massive ripples throughout the code base. You have to take a step back and really think about what you’re doing, which involves having a much more rigorous mentality about your code.

Here are some themes I have identified which make all the difference:

1. Problem-Focused Design

You’re not focused on showing off a tool. You’re not focused on showing off, say, a novel application of neural networks towards a specific task. You have to solve an actual problem (for example, how to autonomously unload a truck full of boxes) and use whatever tool is at your disposal to get it done. I think wiring your brain early on to think about problems rather than tools or technologies is extremely important and will make you an overall better engineer regardless of whether you are still in school, in research, or in industry.

2. Specialized Teams

In a smaller robotics role, you may be buying hardware off the shelf, or doing a little bit of everything to cobble together a simple system. For example, hobbyists learn a tremendous amount from building their own robot but there is only so much a single person can do. Having specialized teams for hardware (mechanical, electrical, manufacturing, etc.) and software (IT, DevOps, and various front-end applications such as controls, planning, and perception) makes all the difference in how much can get done… even if it sometimes feels like a lot of red tape compared to hacking together your own solution and shipping a personal repository.

3. Data, Data, and More Data

The biggest surprise I’ve had at Boston Dynamics so far has been the sheer importance of logging and analyzing EVERYTHING. In previous experiences debugging robot issues, the code was often small enough that you could sort of figure out where things went wrong. When you run Stretch and suddenly it does some weird thing like drive back and forth infinitely, how do you figure out what caused it? We have great data logging systems in place where you can access the robot’s view of the world and home in on what was happening anywhere in the code. You can kind of see this in the “Inside the lab: How does Atlas work?” video.

I want to reiterate that data logging and playback become increasingly crucial as you deal with a more complex system. Having someone run the robot and give you access to a giant data dump for offline analysis is easily worth the effort if you or your organization have the time to sink into tooling. Of course, if you’re working with an off-the-shelf system like ROS, you can rely on awesome tools like rosbag and RViz to do exactly this with much less tool development effort. The point still stands: data logging and analysis are key.
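As a rough illustration of the log-then-play-back idea, here is a minimal Python sketch that writes timestamped records as lines of JSON and later filters them by topic and time window. This is a toy and not the logging system used at Boston Dynamics or the rosbag format: the function names `log_event` and `playback`, the topic names, and the record layout are all made up for the example.

```python
import io
import json
from typing import Any, Dict, Iterator, TextIO


def log_event(stream: TextIO, t: float, topic: str, data: Dict[str, Any]) -> None:
    """Append one timestamped record as a single line of JSON."""
    stream.write(json.dumps({"t": t, "topic": topic, "data": data}) + "\n")


def playback(stream: TextIO, topic: str,
             t_start: float, t_end: float) -> Iterator[Dict[str, Any]]:
    """Yield records for one topic within a time window, in logged order."""
    for line in stream:
        record = json.loads(line)
        if record["topic"] == topic and t_start <= record["t"] <= t_end:
            yield record


# An in-memory stream stands in for a log file written during a robot run.
log = io.StringIO()
log_event(log, 0.0, "base_cmd", {"vx": 0.2})
log_event(log, 0.5, "box_count", {"visible": 12})
log_event(log, 1.0, "base_cmd", {"vx": -0.2})
log_event(log, 2.0, "base_cmd", {"vx": 0.2})

# Offline analysis: rewind and look only at base commands in a time window.
log.seek(0)
window = list(playback(log, "base_cmd", 0.5, 2.0))
velocities = [record["data"]["vx"] for record in window]
print(velocities)
```

Seeing the commanded velocity flip sign inside a narrow time window is the kind of clue that helps explain a "driving back and forth" symptom, and it is only available if the data was recorded in the first place.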

4. Testing of All Varieties

Code can get very complicated. I am a big fan of the adage in the Software Engineering at Google book that software engineering ≠ programming. A big part of this is the need for testing code vs. simply cranking out buggy features at extreme velocity. Especially for a robot like Stretch which runs quite a lot of code, it is virtually impossible to develop a new feature and expect it to immediately work on the hardware.

Unit testing: As you develop new code, you should check whether that piece of code works in standalone fashion before integrating it into the rest of the code. This typically means defining a set of test cases and expected behavior to check against. You can look at my Testing Python Code post for an example, noting that every language worth its salt has similar unit testing frameworks in place.
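For a sense of what "a set of test cases and expected behavior" looks like in practice, here is a small sketch using Python’s built-in `unittest` module. The function under test, `clamp_speed`, is a hypothetical helper invented for this example.

```python
import unittest


def clamp_speed(commanded: float, limit: float) -> float:
    """Saturate a commanded speed to the range [-limit, limit]."""
    if limit < 0:
        raise ValueError("limit must be non-negative")
    return max(-limit, min(limit, commanded))


class ClampSpeedTest(unittest.TestCase):
    def test_within_limit_is_unchanged(self):
        self.assertEqual(clamp_speed(0.3, 1.0), 0.3)

    def test_saturates_in_both_directions(self):
        self.assertEqual(clamp_speed(2.5, 1.0), 1.0)
        self.assertEqual(clamp_speed(-2.5, 1.0), -1.0)

    def test_negative_limit_is_rejected(self):
        with self.assertRaises(ValueError):
            clamp_speed(0.3, -1.0)


# Load and run the test case explicitly so the script does not exit on completion.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ClampSpeedTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

The three tests cover the nominal case, the boundary behavior, and invalid input, which is a reasonable minimum for even a one-line function. In a real project you would typically let a test runner discover the tests rather than building the suite by hand.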

Simulation testing: Simulation is extremely useful when used correctly, but also extremely easy to misuse. Thanks to my days at MathWorks, the model-based design paradigm got drilled into my head, but more importantly I consider myself to have developed a good intuition for when simulation is being used appropriately. At Boston Dynamics, we are fortunate to have a good platform where we can set up simulated worlds and check that the robot has performed the intended tasks through scripts that check the state of the robot and the world at key moments. For example, “verify that by the time the robot thinks it’s done unloading the truck, 60 boxes were placed on the conveyor without falling”.
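A check like the one quoted above could be sketched in Python as follows. This is a toy under heavy assumptions: there is no simulator here, the `Box` type and its `location` labels are invented, and the "final state" is hand-built to stand in for whatever a real simulation platform would report at the end of a run.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Box:
    name: str
    location: str  # hypothetical labels: "truck", "conveyor", or "floor" (dropped)


def check_unload_complete(boxes: List[Box], expected_on_conveyor: int) -> List[str]:
    """Return human-readable failures; an empty list means the check passed."""
    failures = []
    dropped = [box.name for box in boxes if box.location == "floor"]
    if dropped:
        failures.append(f"dropped boxes: {dropped}")
    on_conveyor = sum(box.location == "conveyor" for box in boxes)
    if on_conveyor != expected_on_conveyor:
        failures.append(
            f"expected {expected_on_conveyor} boxes on conveyor, found {on_conveyor}"
        )
    return failures


# Stand-in for the world state at the moment the robot reports it is done:
# 59 boxes made it to the conveyor and one was dropped.
final_state = [Box(f"box_{i}", "conveyor") for i in range(59)]
final_state.append(Box("box_59", "floor"))

failures = check_unload_complete(final_state, expected_on_conveyor=60)
for failure in failures:
    print(failure)
```

Returning a list of failures rather than stopping at the first one makes a failed simulation run easier to diagnose, since a single dropped box shows up both as a drop and as a count mismatch.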

Hardware testing: At the end of the day, you can’t replace actual on-robot testing. However, if you did your job right, the unit and simulation tests will drastically reduce the time you spend breaking the hardware; that is, you can gain some confidence about the safety of the code as vetted by unit tests and simulation. At Boston Dynamics, we ensure that code is run often on the robot hardware so surprises come up as soon as possible. By going through a regularly scheduled rotation of standard robot tests, we can discover new issues that were not caught by other tests. These may be things that are unique to the real world (like sources of noise that do not exist in simulation), or opportunities to add new unit and/or simulation test cases to catch these issues earlier in the future.

5. Mature Software Platform

Related to the previous point, it is great to have testing, but it’s also necessary to have frameworks in place to do the testing effectively. As with any professional software development environment, this usually involves using source control (such as Git) and having a full continuous integration / continuous delivery (CI/CD) pipeline that automates a lot of the testing. I don’t claim to be an expert on this, as it is not my focus area, but in my Continuous Integration post I show a simple example.

A new hire on my team recently told me they had used Git before, but never had to worry about branches. What they meant to say was that by working on personal projects there was never a need to worry about conflict resolution or tracing the source of failures. Similarly, as I have moved from my earlier jobs to my current one, I have seen the code complexity increase by virtue of problem complexity: More people, more features, and more lines of code means more branches, more conflicts, and more bugs that need to be caught early on. It be like that sometimes.

With a proper CI/CD pipeline, you ensure that even when code conflicts are resolved and “it works on your machine”, you are not allowed to push these changes until they are verified. This usually means running through an automated suite of tests that checks whether your changes broke anything elsewhere in the code base. Without the necessary tests passing on your branch, the system will prevent you from propagating these breaking changes to your colleagues until you have resolved them. From personal experience, the number of seemingly unrelated things I have broken that were caught by CI/CD is astonishing, and without these checks in place our robots would quickly descend into chaos.

Conclusion

At the time of writing this, I haven’t even hit my 6-month mark working on Stretch behavior at Boston Dynamics. However, I can say with certainty that I have learned so much already, that I have a lot more to learn, and that it’s extremely motivating to work in a team of very capable people and to see your collective effort on a real robot moving real boxes.

These were my 4 professional goals from last year’s post:

- More work on home service robotics: This transformed to “more work on warehouse robotics”, but I am fine with that! Both applications are interesting and there is a lot of technological overlap between these areas.

- Variety of projects: Between the additional projects at MIT and the completely new job, I think this goal was far exceeded!

- Hands-on with C++: This was also met, as C++ is the main development language here at Boston Dynamics (and generally, on most commercial robots). I am so much more comfortable with it than I was a year ago, and will continue to use it going forward.

- Hands-on with ROS 2: This one did not gain any traction, so let’s try again this year?

So what are my goals for 2022?

- Become a productive Stretch developer at Boston Dynamics: I’m still new to my job, and there is a lot more to learn. I hope to transition from kind of getting the hang of things to contributing significantly to the behavior suite on Stretch. It may be a while until you all see the effects of that, but it will happen.

- Hands-on with ROS 2: Maybe this year I’ll actually spend some time tinkering with this in a real capacity? The great thing is the longer I wait, the closer I get to experiencing ROS 1 feature parity and resolution of bugs and usability issues.

- Post more technical blogs: I can’t directly post about my work at Boston Dynamics beyond an overview like this one, so I’d like to find some other technical topics to share with you all. Whether it’s on programming (C++ is sorely lacking on here), frameworks like ROS 2, or other technical topics in the behavior area (task and motion planning, finite-state machines, etc.), I have some ideas in stock.

Thank you for reading my 2021 recap posts, and let’s keep connected in 2022. Your ideas for new material on here are always appreciated, and I look forward to hearing from many of you.
