In this post I will introduce Natural Language Processing (NLP) at a high level.

This is adapted from a presentation I created for RoboCup@Home Education — a great initiative I began supporting a few years ago thanks to my former job at MathWorks.

If reading long blog posts is not your style, you can find the video below and access my GitHub repo for the code and slides.

What is NLP?

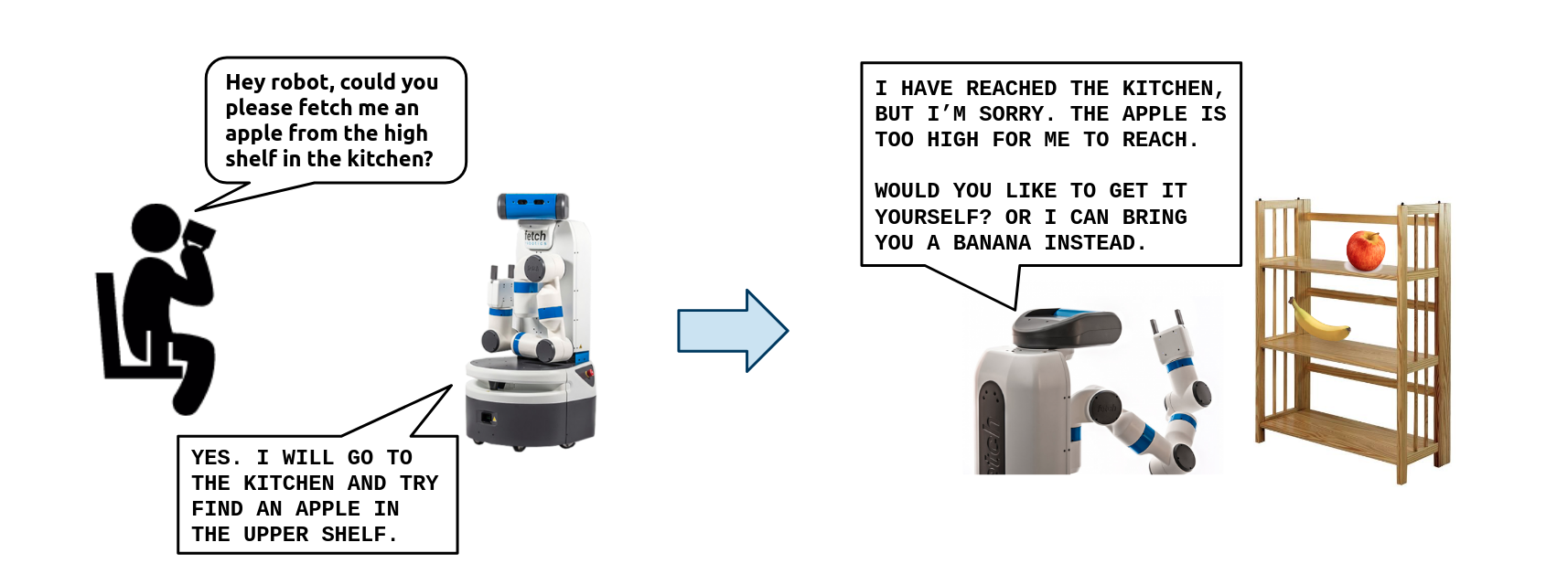

NLP is a branch of artificial intelligence dealing with communication between humans and artificially intelligent agents. Since this is foremost a robotics blog, let’s suppose our agent is a robot.

The “natural” part refers to how we communicate with the robot — in an ideal world, we would interact with robots exactly the same way we would communicate with humans. In contrast, “unnatural” languages might be a programming language that lets us send commands or monitor sensor values, or a monitor with messages, graphs, and blinking lights that would let us interpret the robot’s status. Basically, you need some expertise with that particular system to be productive, whereas with natural language anyone could interact with the robot. I think this is the main motivation for NLP in robotics.

Communication is verbal and non-verbal, and technology exists to deal with both. Let’s simplify the problem by assuming that our robot will strictly deal with verbal communication. We can therefore break down a communication problem into the following steps:

- Speech Recognition: Processing raw audio from a human into a verbal representation (words)

- Natural Language Understanding (NLU): Extracting information, or meaning, from words.

- Natural Language Generation / Text-To-Speech (TTS): Synthesizing words and/or audio to play back to a human.

Icons made by Freepik from www.flaticon.com

To say a bit more about nonverbal communication: Imagine that a robot could interpret the state of a human directly from audio or from other modalities like vision. If the human has their arms crossed, is frowning, and/or is speaking in a harsh tone, this may imply they are angry regardless of the words being spoken (which could actually be contradictory). Similarly, a robot could infer that the appropriate reaction to an angry person is to synthesize a response that is calming or apologetic, as might be the case for a service robot. In a different application where the robot assumes a less subservient role, it may instead “take offense” and scold the human for being rude. Communication is complicated and robots don’t make it any less so.

NLP, like most other branches of AI, can consist of a combination of rule-based and statistical components. Rule-based systems require manually programmed instructions whereas statistical systems learn a model from data. These have their advantages and disadvantages, which we will discuss throughout the rest of this post.

Rule-Based NLP

Rule-based systems are constructed manually, meaning a programmer must put together a known set of rules, knowledge, and logic to process language. This has the advantage of being completely deterministic and interpretable — unless we introduced a bug in implementation, we know the exact output the system will produce given an input. However, language is imperfect, fluid, and can have more exceptions than rules… so it’s extremely time-consuming for these types of systems to generalize to all the scenarios one might expect in natural conversation (i.e., infinite scenarios). Thus, encoding rules is most often practical for simple, constrained cases and not so much for general-purpose communication.

One of the most famous rule-based systems is Cyc, which has taken developers a tremendous amount of time and effort to build a knowledge base and rule set. For a quick introduction (and marketing pitch) to Cyc, check out this video.

Some of the simplest rule-based methods for processing text might accept text that goes through a pipeline similar to the one in the diagram below. In this example, suppose we want a robot to respond to various types of commands involving fruit. After some basic preprocessing of the text, we can match keywords in the sentence to a knowledge base of known fruits (e.g., apple) and actions to perform on those fruits (e.g., get). In most languages, there are several words to describe the same action, so we can encode some robustness to our system by also maintaining synonyms in our knowledge base (e.g., bring, fetch) — or even anticipating common mistakes (e.g., birng).

Building a basic rule-based system can be relatively easy and effective for highly focused tasks — and you can do this more or less with core functionality in any programming language. However, I hope you can see that maintaining these systems can become a tremendous effort for any sufficiently complex task — something that the Cyc developers claim to have been tending to for 30 years!

The more popular (and admittedly more interesting) body of rule-based NLP deals with applying linguistics to computational problems. There are many standard tools — most notably NLTK and spaCy in Python — to perform these kinds of tasks. Following the example pipeline in the diagram below, we can extract some common themes:

- Preprocessing: The conversion of a document of raw text to a list of sentences, each of which is split into a list of tokens (typically words and punctuation).

- Part-of-speech (POS) tagging: A common step in various NLP applications. Tagging sentences with their parts of speech can help a system extract context from a sentence. Refer to this page to understand what POS tags such as “DT”, “NN”, and “VB” mean. Spoiler alert: POS tagging is typically not rule-based!

- Extracting information: Once each token in a sentence has been tagged, we can apply a grammar to break the sentence into higher-level elements such as phrases — e.g. noun phrases or verb phrases. Grouping related elements of a sentence and forming a parse tree, AI systems can more easily extract useful information such as “what is the subject/object?” (named entity recognition) or “what does ‘it’ refer to in the third sentence?” (coreference resolution).

Source https://www.nltk.org/book/ch07.html

You can learn more about this example from Chapter 7 of the NLTK book. Let me also reiterate that some parts of this system are not rule-based — namely the part-of-speech (POS) tagging, which is incredibly difficult to do with rules and is today almost uniformly done using statistical approaches learned from data (see why at this link). This leads us into the next section on statistical NLP.

Statistical NLP

I’ve hopefully made it clear by now that language is messy. This makes rule-based systems notoriously brittle especially if you expect them to deploy them around the world, where users are expected to bring with them a huge set of linguistic idiosyncrasies (for example, using big words for no reason).

The solution that has changed NLP over the last few decades is to use statistics — in other words, instead of directly engineering and implementing rules, have the AI system learn its own internal model given a massive corpus* of data. Machine learning today is at the core of modern NLP in all its forms: unsupervised, supervised, and reinforcement learning.

* Corpus (plural: corpora) is Latin for “body”

…as in, “a body of text”.

Such models, of course, deal with numeric data and not raw text or speech. Much of this section will be devoted to answering the question: how do we encode a sentence to plug into machine learning models?

Traditional Methods: Before Deep Learning

Recall that much of practical machine learning is making sure that features extracted from the physical world are meaningful for a statistical model. Here I will try to summarize the approaches historically used to get features from text. Near the end of the post I will say a little about speech as well.

One-hot encoding: This is a simple concept — suppose your dataset consists of 10 distinct words. Our feature vector can then be a 10-dimensional vector, where each element is 0 if the word is not in the sentence and 1 if the word is in the sentence. Of course, real vocabularies are much bigger than 10 words, so this often leads to sparse feature vectors which can be challenging for machine learning. It also doesn’t take into account the ordering (unless you roll each word out as a sequence) or the word counts in a sentence.

Bag of Words: This approach is similar to one-hot encoding except that the feature vector how handles word counts as well. For example, in the sentence “the dog in the park”, the feature vector element corresponding to the word “the” would actually have a value of 2 instead of 1. Bag of words encoding has a few extra techniques named Tf-Idf which can also help with learning compared to one-hot encoding, which are explained in the slideshow below and in this Datacamp post by Avinash Navlani.

n-grams: One-hot encoding and/or bag-of-words does not necessarily have to operate on words — you could do this with lower-level features like characters, or higher-level features like sequences of words. Imagine that instead of assigning a unique feature dimension to each word, you did this for every pair or triplet of consecutive words. These are known as bigrams and trigrams, respectively, and belong to a general class of n-grams for various values of n (trivially, 1-grams are just individual words). This type of encoding has been shown to help with learning because it honors the context in which words appear, but has the problem of being even more data-hungry because it’s more difficult to get a dataset that contains a diversity of n-grams vs. individual words. As with anything in AI, there is no free lunch.

In fact, clever uses of n-grams were the state-of-the art in text encoding, especially for sequence-based tasks like machine translation and text summarization. This was before deep learning based approaches took over in more recent years.

Unsupervised learning: On its own, unsupervised learning is used extract patterns from unlabeled data. However, unsupervised learning can also help us extract compressed feature representations from unlabeled data that are suitable inputs for supervised learning. Methods like principal component analysis (PCA) or topic modeling are common in this space.

Source: https://www.machinelearningplus.com/nlp/topic-modeling-visualization-how-to-present-results-lda-models/

Regardless of how you choose to encode your text, once you have a numerical representation for your text data you can train any type of model. Tools like scikit-learn in Python are helpful both for building the feature vectors and iterating through a variety of model types and training parameters (i.e., the “fun” part of machine learning).

Modern Methods: After Deep Learning

It took a lot of brain power to come up with smart ways to encode text into numerical representations for use with statistical models like Support Vector Machines (SVMs), but the field has evolved to tackle more complex problems that require more complex solutions. Here are the main issues with the “old school” of machine learning for NLP:

- Hand-engineered features are inefficient.

- Long and/or variable-sized sequences are challenging.

- Representational capacity of “shallow” models is limited.

This is where we get into the time period that neural networks took the world by storm. Deep learning has been effective in NLP for two main uses:

- Extracting lower-dimensional, dense embeddings from text representations

- Using novel model structures that can deal with long and/or variable-length text sequences, and have massive representational capacity

Word Embeddings

Many the text feature vector representations we discussed requires one extra dimension for each word / n-gram in the vocabulary. This means that feature vectors are sparse: that is, a typical sentence will have few nonzero elements.

Also, each word / n-gram is treated independently — the feature vector for e.g. “the big dog walks” vs. “the large hound walked” would look completely different and it’s up to our model to learn from data that these sentences mean almost the same thing… as well as learning whatever other problem we want the model to solve.

Learning text embeddings solves Issue #1: Hand-engineered features are inefficient.

The key principle here is splitting up the problem into two parts: First, we encode these giant word feature vectors, into a lower-dimensional, dense representation — also known as an embedding. Then, we use these embeddings as the input to our task-specific model.

Typically, this is done using supervised learning techniques that learn to predict words from their neighboring context. After training, we pull out the “encoder” part of the network and use it as the starting point for training a bigger model that solves our desired task.

There are many types of word embeddings, which roughly fall into two categories.

- Embedding single words — e.g., Word2Vec, GloVe, FastText

- Embedding word sequences — e.g., InferSent, ELMo

Many of the models below come with open, pretrained versions that we can use for research or practice. I have personally used the GloVe embeddings from Stanford in some of my work on NLP for service robotics. For an introduction to working with the GloVe embeddings, check out my example on GitHub. Lilian Weng from OpenAI also has a great blog post talking generally about text embeddings.

One final note: Learned embeddings do not have to be word embeddings, though they are the most common. You can also embed characters, phonemes, entire sentences, or really any other subdivision of text you can put through a machine learning model.

Neural Networks in NLP

Regardless of the features we use to encode our text, the simplest type of deep neural network — a multi-layer perceptron, or fully connected network — can often outperform some of the traditional models like logistic regression. This is because multiple layers with nonlinear activation functions in between, plus the sheer number of learnable parameters in neural networks, can add more representational power at the expense of requiring much more data (again, no free lunch).

Deep neural networks solve Issue #2: Representational capacity of “shallow” models is limited.

Many traditional models accept fixed-size data, which is why we collapsed sequences into a single vector that ignored the order of words (except n-grams, which kept some of that order around for short windows). But, n-grams are impractical beyond 4- or 5-grams because of combinatorial explosion on the feature vector size and the size of training data required to get good coverage of possible n-grams.

Word embeddings (or other similar text embeddings) get around the dimensionality issue, but not so much the variable-length issue. Unsurprisingly, natural language is full of variable-length sequences, so this was a big breakthrough that novel neural network architectures could handle. Namely, these are convolutional neural networks (CNNs) and recurrent neural networks (RNNs).

Deep neural networks solve Issue #3: Long and/or variable-sized sequences are challenging.

Specifically, RNNs were the state-of-the-art for NLP until around 2017. RNNs consist of individual recurrent units that share parameters, and can thus be chained to any length to handle sequences over time that have different lengths — and similarly, can be trained using backpropagation through time. In our case, we’re mostly dealing with variable-length sequences of word embedding vectors that must perform some output, such as classification or sequence generation. Go through the images below for some examples, or for more detail check out Andrej Karpathy’s blog post.

RNNs in their plain “vanilla” forms still have their own issues in dealing with long sequences of text, but many enhancements over the years sought to deal with these issues. Some important ones used often in practice are:

- Long-Short Term Memory (LSTM) units: Vanilla RNNs suffer from the problem of vanishing and exploding gradients over long sequences, which makes them difficult to train. Clever types of recurrent units such as LSTMs and Gated Recurrent Units (GRUs) have helped with this. I like this blog post as an introduction to LSTMs.

- Bidirectional RNNs: Regular RNNs go through a sequence in one direction (typically the forward direction). If you have access to the full input sequence ahead of time, it can help to chain two RNNs together — one passing forward and one passing backward through the sequence.

- Attention mechanisms: It can be challenging for a network to “remember” what happened earlier in its final encoding of the entire sequence, especially if that sequence is long. Even though LSTMs and bidirectional RNNs can address this to some extent, it’s far from perfect. Attention mechanisms augment RNNs with more learnable weights that perform some operation on the hidden states which mathematically create “shortcuts” from earlier content in the sequence to the output of the encoder (much like ResNets did for CNNs in computer vision). In the official machine learning jargon, these models can “attend” to specific context along the sequence by assigning higher weights to things that have been learned to have significant impact. Lilian Weng’s blog comes through yet again with great learning resources.

Unsurprisingly, all of the tweaks above have one common theme: More representational power at the expense of a bigger model with more learnable parameters. Have I said “no free lunch” already?

Attention in particular was shown to be extremely successful at helping to learn (and retain) context for longer sequences. It was so succesful, in fact, that scientists at Google Brain published a paper in 2017 titled “Attention Is All You Need” which introduced the Transformer network architecture.

Transformers are exclusively based on attention mechanisms — so no recurrent elements — with the exception of one “hack” which is to track on a positional encoding input to give the network some spatial information about the text. On the plus side, Transformers are highly parallelizable for training, which is harder for RNNs since outputs depend on previous inputs. However, Transformers are massive networks which means they are compute-hungry in every aspect. Yet another tradeoff!

Today, most state-of-the-art results on NLP benchmarks come from some flavor of Transformer network — one of the most recent being OpenAI’s GPT-3. A fantastic resource to learn more about Transformer networks is Jay Alammar’s blog post “The Illustrated Transformer”.

If you’re left yearning for more information on state-of-the-art applications of deep learning in NLP, I would strongly encourage going to nlpoverview.com.

As far as software tools, PyTorch and TensorFlow dominate this space. Specifically for Transformer networks, I would like to recommend the HuggingFace Transformers library, which works with both PyTorch and TensorFlow.

NLP Is More Than Text

In this last section I want to briefly revisit the non-text part of NLP, which includes audio (a huge part of language) as well as combining NLP with other sensing modalities such as vision, since this is how people actually communicate with each other … when they’re not texting or instant messaging, anyways.

NLP with Speech

Language at its core is speech and text, where text can only express a limited subset of what speech can. Going back to the introduction, NLP also encompasses speech recognition and speech synthesis. Just as we showed with text, there is a whole body of work around machine learning using audio.

To summarize in a few bullets, speech based NLP may consist of:

- Encoding audio into a numeric representation usable by machine learning models. Some common approaches include manually extracting features using signal processing techniques, discretizing an audio signal into a numeric sequence for use with RNNs, or converting an audio signal to a spectrogram for use with CNNs.

- Extracting information from audio. The biggest thing on everyone’s mind should be speech-to-text conversion, or speech recognition. However, you can also directly extract data from audio for applications like sentiment analysis, respiratory disease detection, and more.

- Synthesizing audio. Many artificially intelligent agents are created with the goal of pushing the boundary of how natural our interactions with machines truly are. Think of how commercial products have progressed from generating clearly robotic voices to voices that sound “human”, perhaps even with the ability to demonstrate various emotions to help express intent. There are other applications like vocal style transfer — which shockingly has uses outside of deepfakes, such as generating synthetic voices for people unable to speak.

Below are some Python based resources for NLP with speech. If you are interested take a look.

- The Ultimate Guide to Speech Recognition with Python

- How to Convert Text to Speech in Python

- Audio Data Analysis Using Deep Learning with Python [Part 1] [Part 2]

Multimodal NLP

Finally we get to combining NLP (whether speech, text, or both) with other sensing modalities such as vision. While this post may indicate otherwise, I’m especially interested in this richer form of human-machine interaction because I’m a roboticist and robots have all these sensors and actuators available that could — and should — be used to effectively communicate with humans.

Here are some interesting examples I found.

- This introductory blog post from DrivenData shows a benchmark approach for detecting hateful memes using machine learning on combined vision and text.

- Multimodal Speech Emotion Recognition Using Audio and Text, by David Yoon demonstrates how we can encode numeric features from both speech and text to train a machine learning emotion classifier. The key idea here is that both of these inputs can provide complementary insight into a human’s emotion.

- Vision-Language Navigation by Microsoft Research shows a reinforcement learning approach that combines of vision and text input to teach a simulated agent to navigate household scenes from moderately complex set of instructions.

In my own work at MIT CSAIL, our group adapted and integrated prior work on Leveraging Past References for Robust Language Grounding for service robotics (shout out to Mike Noseworthy!). This approach uses a combination of images with labeled object instances and natural language to identify specific objects in a scene. For our robot, we are able use a combination of rule-based systems and machine learning to translate a complex natural language command into

Conclusion

This post provided a broad, yet still extremely lengthy, overview of NLP. Although NLP has many application areas, I did put a slight spin on robotics because robots are objectively cool. If you disagree, this may not be the place for you.

My key takeaways are:

- NLP involves communication between humans and artificially intelligent agents, with the goal that the human should require little to no additional effort, knowledge, or expertise to communicate with the agent.

- Like with many other areas of AI, NLP system components can be based on rules or statistical methods — most notably machine learning.

- NLP is not just text processing, and not just verbal communication. Speech, vision, and other sensing modalities can augment the performance of AI systems because they provide additional information about the state of the world.

- Once you have a way to encode text (or audio, images, etc.) into a numerical representation, any machine learning approach can be applied to such representations. Machine learning has largely been successful in recent years because of a) clever ways to compress data from such sources in a practical manner and b) compute power allowing the use of deep neural networks as the model architecture of choice.

- Decoding language representations with the same encoding methods above is just as important if the desired task is to generate language rather than make numeric predictions, such as class probabilities.

To learn more, check out my YouTube presentation and GitHub repo with code and slides. If you would like me to dig deeper into any particular topic(s) for future posts, let me know in the comments or reach out directly.

One thought on “Introduction to Natural Language Processing”