In this post, I will go through some basic examples for managing your Python development environment using the standard tools in any recent Python 3 install. These tools are:

- venv, for creating isolated virtual environments

- pip, for installing Python packages

Why is environment management important? Let’s say you’ve installed Python on your system and you’re using that one installation for all your work.

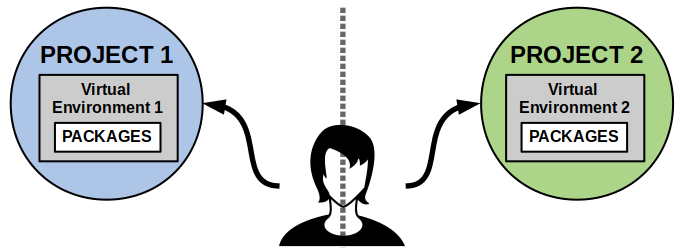

- Working on multiple projects: Now suppose you have several Python projects that share some of the same packages. Maybe everything worked for one project, but another project needed different package versions, and updating them ends up breaking the first project!

- Sharing with others: Someone else will likely have to run your code at some point. It’s important to make it easy for others to install the right packages and versions to reproduce your work.

- Reducing waste: Even if you never run into package conflicts, your monolithic system install of Python ends up with more packages than any one project requires. Sure, things may still work if you install more than is needed, but it is more efficient to keep a minimal list of package dependencies for when you or others need to do a clean setup of the project.

Icons made by Freepik from www.flaticon.com

Working with Virtual Environments

A virtual environment is an isolated runtime environment for Python. Any Python packages you install inside a virtual environment affect only that environment, and packages installed outside it have no effect inside it. The idea is that you can have multiple non-conflicting virtual environments on one system.


The standard virtual environment tool included with Python 3 is venv.

To create a virtual environment named my-first-environment in a specific folder using venv, the command would look like this:

python3 -m venv /home/python-envs/my-first-environment
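If you ever need to create an environment from a Python script rather than the shell, the standard library's venv module exposes the same machinery. The sketch below is only an illustration, and the helper name create_env is made up. It returns the path of the pyvenv.cfg file that every venv-created environment has at its root.

```python
import os
import venv
from pathlib import Path


def create_env(env_dir: str, with_pip: bool = True) -> Path:
    """Create a virtual environment, roughly what `python3 -m venv env_dir` does."""
    env_path = Path(env_dir)
    # with_pip=True bootstraps pip into the environment via ensurepip, like the CLI.
    # The CLI symlinks the interpreter on non-Windows systems, while venv.create
    # copies it unless asked to symlink, so pass symlinks explicitly to match.
    venv.create(env_path, with_pip=with_pip, symlinks=(os.name != "nt"))
    # Every environment created by venv has a pyvenv.cfg file at its root.
    return env_path / "pyvenv.cfg"
```

Calling create_env("/home/python-envs/my-first-environment") mirrors the command above. Bootstrapping pip relies on ensurepip, which some Linux distributions package separately.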

Then, to activate the virtual environment in a Linux Bash Shell, you would type the following.

source /home/python-envs/my-first-environment/bin/activate

If you’re in a Windows Command Prompt, it might look a little different.

C:\python-envs\my-first-environment\Scripts\activate.bat

You can tell which environment you’re in because your shell prompt will be prefixed with the name of the virtual environment, for example (my-first-environment).
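The prompt prefix is a shell convenience. From inside Python, a common check compares sys.prefix with sys.base_prefix. In an environment created by venv, sys.prefix points at the environment, while sys.base_prefix points at the installation it was created from. Here is a minimal sketch, where the helper name active_venv is made up:

```python
import sys
from typing import Optional


def active_venv() -> Optional[str]:
    """Return the environment directory if this interpreter runs in a venv, else None."""
    # In a venv, sys.prefix is the environment directory, while sys.base_prefix
    # still points at the Python installation the environment was created from.
    if sys.prefix != sys.base_prefix:
        return sys.prefix
    return None


print(active_venv() or "not in a virtual environment")
```

This inspects the interpreter, not the shell. Running /home/python-envs/my-first-environment/bin/python directly also reports the environment, even if you never activated it.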

If you want to know more about virtual environments, check out this great blog post from RealPython.com.

Installing Packages with pip

Now that you’re working inside a brand new virtual environment, you probably need to install some (or a bunch of) Python packages.

The official package manager in Python is pip.

For example, to install the NumPy package — best known for array manipulation and linear algebra — you can type:

pip install numpy

This will install the latest version of NumPy that is supported by your current Python version. More importantly, package management systems like pip will make sure that any dependencies (and their correct versions) will also get installed when you do this.
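Each installed package carries metadata listing the dependencies it declares, so you can check what pip had to pull in. The sketch below uses importlib.metadata from the standard library (Python 3.8+), and the helper name is hypothetical.

```python
from importlib.metadata import PackageNotFoundError, requires
from typing import List


def declared_dependencies(dist_name: str) -> List[str]:
    """Return the requirement strings a distribution declares.

    Returns an empty list if it declares none or is not installed.
    """
    try:
        # Entries are requirement strings, possibly with version limits or
        # environment markers after a ';'.
        return requires(dist_name) or []
    except PackageNotFoundError:
        return []
```

For a package such as pandas, the returned list should include an entry for numpy.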

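For packages installed from PyPI, you can pin an exact version, or constrain the allowed versions with inequalities. Put quotes around any specifier containing < or >, because otherwise the shell treats those characters as redirection. Here are a few examples.

pip install numpy==1.17

pip install "numpy>=1.18.1"

pip install "numpy<1.18"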
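To make the inequality semantics concrete, here is a deliberately simplified model of how a specifier is matched against a version. It is a toy and not pip's implementation. It handles only plain numeric versions like 1.17 or 1.18.1 and the five operators shown. The full rules (PEP 440, implemented by the packaging library) also cover pre-releases, ~=, !=, and wildcards. One detail it does mirror is zero-padding, which is why numpy==1.17 matches version 1.17.0.

```python
import operator
from typing import Tuple

# Toy model: only plain numeric release versions such as "1.17" or "1.18.1".
_OPS = {
    "==": operator.eq,
    ">=": operator.ge,
    "<=": operator.le,
    ">": operator.gt,
    "<": operator.lt,
}


def _release(version: str, width: int) -> Tuple[int, ...]:
    parts = [int(p) for p in version.split(".")]
    # Pad shorter versions with zeros, as PEP 440 does, so 1.17 compares equal to 1.17.0.
    return tuple(parts + [0] * (width - len(parts)))


def satisfies(version: str, spec: str) -> bool:
    spec = spec.strip()
    # Check two-character operators before their one-character prefixes.
    for op in ("==", ">=", "<=", ">", "<"):
        if spec.startswith(op):
            target = spec[len(op):].strip()
            width = max(version.count("."), target.count(".")) + 1
            return _OPS[op](_release(version, width), _release(target, width))
    raise ValueError(f"unsupported specifier: {spec!r}")


print(satisfies("1.17.0", "==1.17"))    # True
print(satisfies("1.18.0", ">=1.18.1"))  # False
print(satisfies("1.17.5", "<1.18"))     # True
```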

Once you have installed your package, one way to check its version is to read pip’s output and see which version it decided to grab. Another is to start Python, import the package, and print its version number.

python

>>> import numpy

>>> numpy.__version__

'1.17.0'
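If you only want the version and not the import check, importlib.metadata (standard library, Python 3.8+) can read it from the installed package metadata without importing the package. This is a sketch with a hypothetical helper name. It takes the distribution name you gave pip, which is not always the same as the import name. It also cannot tell you whether the import would actually succeed, which is why the interactive check above is still worth doing.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(dist_name: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is missing."""
    try:
        # Reads the installed package metadata; the package itself is not imported.
        return version(dist_name)
    except PackageNotFoundError:
        return None


# Prints a version string such as '1.17.0', or None if NumPy is not installed here.
print(installed_version("numpy"))
```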

I like testing this way because it confirms that the package will actually import when I start writing code. I would also recommend typing in a few commands from the package, because you never know what unresolved dependencies could be creeping around. (Spoiler alert: I’ll talk later about automating this with unit tests.)
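Until then, a simple stand-in is a script that imports every package the project needs and reports which imports fail. This is a minimal sketch, not the unit-test setup mentioned above, and both import_smoke_test and the module list are hypothetical. It catches any Exception rather than only ImportError, because a package can be found but still fail while initializing, for example when one of its compiled dependencies is missing.

```python
import importlib
from typing import Dict, Iterable


def import_smoke_test(module_names: Iterable[str]) -> Dict[str, str]:
    """Try importing each module; return {name: error message} for the ones that fail."""
    failures = {}
    for name in module_names:
        try:
            importlib.import_module(name)
        except Exception as exc:  # ImportError, or an error raised while the module initializes
            failures[name] = f"{type(exc).__name__}: {exc}"
    return failures


# Hypothetical dependency list for a project; an empty dict means everything imported.
print(import_smoke_test(["numpy"]))
```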

Now try deactivating your virtual environment (literally type deactivate), start your system install of Python, and print the NumPy version again. What version do you have? Do you even have NumPy at all?

Aside: Python 2 and Python 3

If you’re using Ubuntu 18.04 or earlier — or really, any operating system released before mid-2020 — you may ask yourself another question: Did Python 2 or Python 3 start up when you typed python?

Working locally in a system with both Python 2 and Python 3 usually involves having to use the python/python3 or pip/pip3 commands respectively, and from personal experience this is really easy to mess up. With a virtual environment, typing python or pip will ensure you get the version you used when you created the environment.
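A quick way to settle the question is to ask the interpreter itself. The script below is only an illustration. It is written to run under Python 2 as well, since that is exactly the case you might be trying to detect. Save it under any name, for example which_python.py (a hypothetical file name), and run it with both python and python3.

```python
import sys

# Written to run under both Python 2 and Python 3, so it can show which one
# a bare `python` command starts.
info = {
    "executable": sys.executable,  # full path of the running interpreter
    "version": "%d.%d.%d" % tuple(sys.version_info[:3]),
    # Python 2 has no sys.base_prefix, and old-style virtualenv sets
    # sys.real_prefix instead, so check for both.
    "in_venv": sys.prefix != getattr(sys, "base_prefix", sys.prefix)
    or hasattr(sys, "real_prefix"),
}

for key in sorted(info):
    print("%s: %s" % (key, info[key]))
```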

Since Python 2 was officially sunset at the end of 2019, this hopefully won’t be a long-term issue, but there will be an inevitable delay in getting everything up to date through this big change — not just in package developers updating their software, but in you upgrading your system to the bleeding edge.

Aside: Where Do Packages Come From?

Stork icon made by Eucalyp from www.flaticon.com

When you install a package using pip, these packages are for the most part found:

- Online in the Python Package Index (PyPI)

- As packaged archives: the standard being wheels, or .whl files

- As source code: if you see a setup.py file and you are asked to run pip install ., that’s a tell-tale sign.

As a Python user, you read that list top to bottom. Ideally the package you want is on PyPI, and anything further down the list means more work for you.

As a Python developer, you read the bullets bottom-to-top. Depending on how easily you want others to install your code vs. how much effort you want to put in, your source code can be shared as-is, packaged up as a wheel, or uploaded to PyPI.

Sharing Your Package Requirements with Others

Once you’re ready to share your code with others, it’s important to capture the exact list and version of Python packages needed to run that code. For now, let’s assume you’re not developing any packages of your own, but rather sharing code that requires a collection of packages to run correctly.

pip has a freeze command that lists all installed packages with their exact versions, and you can redirect that list into a text file. The syntax is fairly straightforward:

pip freeze > my-requirements.txt
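To get a rough idea of what pip freeze produces, here is a standard-library approximation built on importlib.metadata (Python 3.8+). It is an illustration, not a replacement, and the helper name freeze_lines is made up. The real pip freeze also hides pip itself by default and writes editable or VCS installs in a different form.

```python
from importlib.metadata import distributions
from typing import List


def freeze_lines() -> List[str]:
    """Roughly approximate `pip freeze`: one 'name==version' line per distribution."""
    lines = set()  # a set, to drop duplicates if a directory appears twice on sys.path
    for dist in distributions():
        name = dist.metadata.get("Name")
        if name:  # skip broken installs with no usable metadata
            lines.add(f"{name}=={dist.version}")
    # Sort case-insensitively so the output is stable and easy to diff.
    return sorted(lines, key=str.lower)


print("\n".join(freeze_lines()))
```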

Just remember to run this command while inside your virtual environment!

Now others can take this text file, create their own virtual environment, and install the exact set of packages that you used. The command on their side would be:

pip install -r my-requirements.txt
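On the receiving end, it can be reassuring to confirm that the environment really matches the file. The sketch below uses a hypothetical helper, check_requirements. It reads plain name==version pins, like the ones pip freeze writes, and reports any package that is missing or installed at a different version. Lines without an exact == pin (for example -e lines) are skipped, and extras and environment markers are not supported.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Optional, Tuple


def check_requirements(path: str) -> Dict[str, Tuple[str, Optional[str]]]:
    """Compare 'name==version' pins in a requirements file with what is installed.

    Returns {name: (pinned, installed)} for every pin that does not match;
    installed is None when the package is missing.
    """
    mismatches = {}
    with open(path) as fh:
        for raw in fh:
            line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
            if "==" not in line:
                continue  # this sketch only understands exact pins
            name, pinned = (part.strip() for part in line.split("==", 1))
            try:
                installed = version(name)
            except PackageNotFoundError:
                installed = None
            # Exact string comparison: fine for pip freeze output, which writes full versions.
            if installed != pinned:
                mismatches[name] = (pinned, installed)
    return mismatches
```

Running check_requirements("my-requirements.txt") after the install should return an empty dict if everything matches.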

There are many options for pip freeze, and you should check out the reference page. In particular, I want to highlight the --local (-l) option, which is super handy for me as a ROS developer. It is common practice to configure the ROS environment automatically every time you start a shell, and part of that configuration adds ROS packages to the Python path. Therefore, if you run pip freeze while the ROS environment is sourced, your output file will contain a lot of extraneous ROS-related packages. With the -l flag, you only get the packages that you have installed locally in the virtual environment itself.
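The idea behind -l can be sketched with importlib.metadata. Keep only the distributions whose metadata lives under the environment's sys.prefix, and drop anything importable only because an outside directory (such as one added by a sourced ROS setup) is on the Python path. This is an approximation, and the helper name is hypothetical. pip's own check differs in its details. For instance, it treats every package as local when you are not inside a virtual environment.

```python
import sys
from importlib.metadata import distributions
from pathlib import Path
from typing import List


def local_distributions() -> List[str]:
    """List distributions whose metadata lives under this environment's sys.prefix."""
    prefix = Path(sys.prefix).resolve()
    names = set()
    for dist in distributions():
        # For distributions found on disk, locate_file("") is the directory that
        # holds their metadata, e.g. the environment's site-packages.
        location = Path(str(dist.locate_file(""))).resolve()
        if location == prefix or prefix in location.parents:
            name = dist.metadata.get("Name")
            if name:
                names.add(name)
    return sorted(names, key=str.lower)


print("\n".join(local_distributions()))
```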


Again, if you are developing your own Python packages, there are other ways to specify dependencies. Here is a quick reference from the Python documentation if you’re interested.

Other Tools You May Encounter

The Python ecosystem is full of useful tools for environment management outside the standard ones that I went through. Below is a short list of some common ones you may run into — and even prefer!

virtualenv

This is similar to venv, but it is not included as part of your Python installation. It is advertised as faster and more feature-rich than venv, so you may find it worth installing that extra bit of software.

pyenv and pyenv-virtualenv

The biggest thing pyenv brings is the ability to install and switch between multiple Python versions, and the pyenv-virtualenv plugin lets you create virtual environments on top of any of them. This can help when working on multiple projects or testing across versions. There’s a blog post on RealPython.com that runs through pyenv in great detail.

conda

Conda integrates package and environment management in one tool. Like the pyenv ecosystem, it supports virtual environments and multiple versions of Python. It also extends to programming languages other than Python, which makes it a more general-purpose tool for managing your development environment. In my opinion, the overall syntax is a lot nicer because it’s an integrated set of tools.

The biggest downside of Conda is that it uses its own package manager instead of pip, so its package repositories carry only part of what is available on PyPI. If you’re comfortable finding other ways to install certain packages, or installing pip inside a Conda environment, you get a lot of other benefits.

Summary

Hopefully you now have a basic grasp of why it’s important to manage your Python development environment. It’s good for you if you’re working on multiple projects, and it’s good for others who are trying to run your code.

In most, if not all, of the Python repositories I plan to release, I will provide requirements files (or something equivalent) for these same reasons. I need to avoid messing up my work setup, and as a bonus you will be able to run my code. Just make sure you do it from a virtual environment!

One final note: In reality, giving somebody a list of Python packages is useful, but there are so many non-Python differences across machines that could easily prevent your code from working as intended. Especially for very complex projects, you may be better off managing the entire development environment. This is where virtualization tools (like virtual machines) or containerization tools (like Docker) come in handy… but that’s a completely different topic.

Until next time!
